Here’s a (brief) summary of language model finetuning, the various approaches that exist, their purposes, and what we know about how they work.

Translation credit: Alexandra Francis

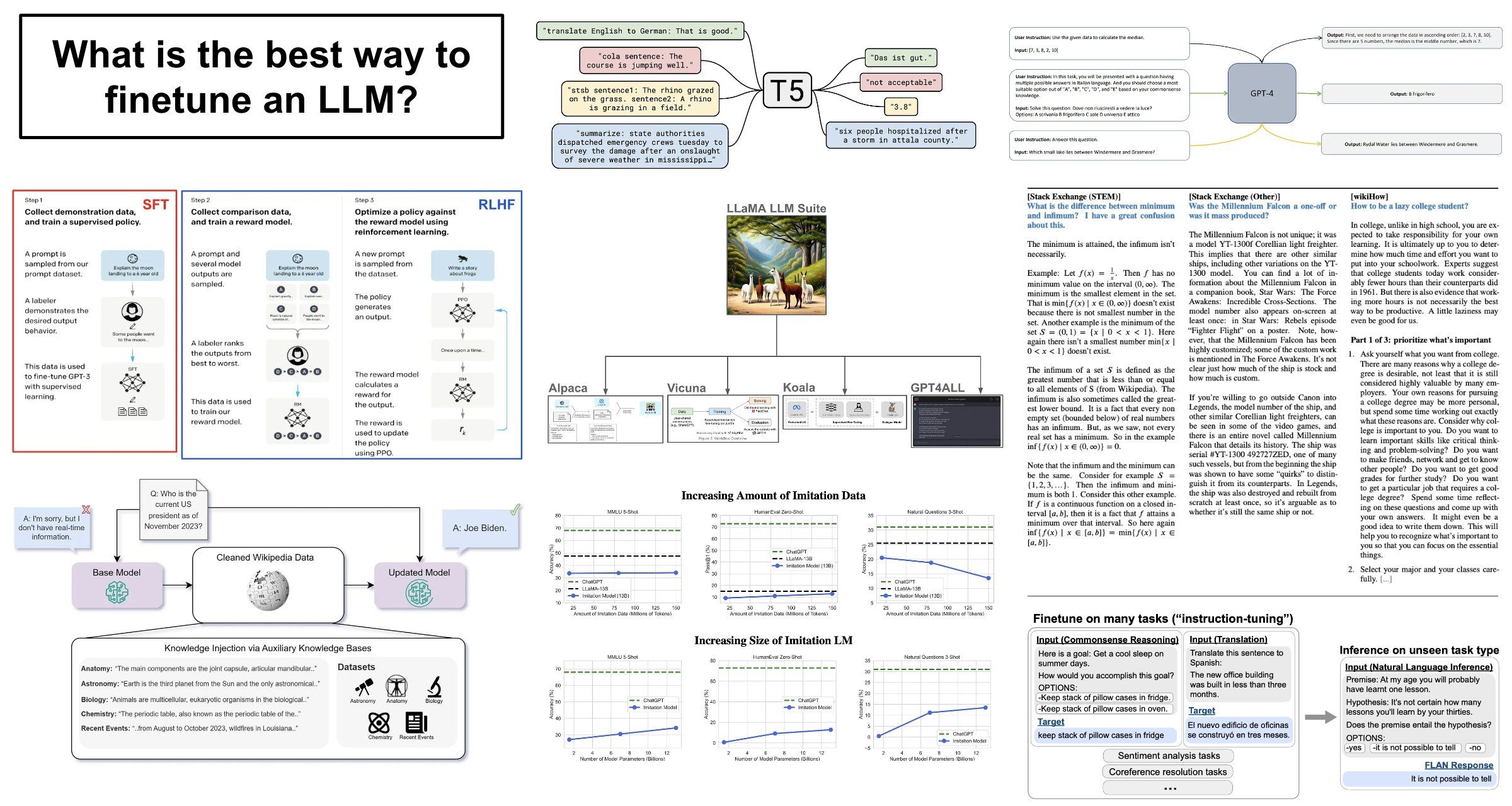

Fine-tuning techniques: The term “fine tuning” refers to further training a pretrained model. In the case of LLMs, this means that we take a pretrained foundation model and train it some more. But, there are so many different ways that this training can be done, which makes the concept of fine tuning incredibly vague. This single term can refer to a variety of different techniques, such as:

- Continued pretraining

- Instruction tuning

- Supervised fine tuning (SFT)

- Reinforcement Learning from Human Feedback (RLHF) or Direct Preference Optimization (DPO)

What is the goal of these techniques? For language models, there are two primary goals that a practitioner will have when performing fine tuning:

- Knowledge injection: Teach the model how to leverage new sources of knowledge (not present during pretraining) when solving problems.

- Alignment (or style/format specification): Modify the way in which the language model surfaces its existing knowledge base; e.g., abide by a certain answer format, use a new style/tone of voice, avoid outputting incorrect information, and more.

Given this information, we might wonder: Which fine-tuning techniques should we use to accomplish either (or both) of these goals? To answer this question, we need to take a much deeper look at recent research on the topic of fine tuning.

Large-scale instruction tuning: Prior to the release of modern open-source LLMs, it was very common to fine tune pretrained LLMs on massive instruction tuning datasets. Such an approach was popularized by models like FLAN [1] (from Google), which perform instruction tuning of pretrained language models over large datasets. In the case of FLAN, for example, the FLANv2 instruction tuning dataset contains over 15M examples—very large! By following this approach, FLAN can learn to solve a large number of different downstream tasks in an efficient manner.

“We show that by training a model on these instructions it not only becomes good at solving the kinds of instructions it has seen during training but becomes good at following instructions in general.” – from FLAN paper [1]

Beyond knowledge injection: After the release of ChatGPT, interest grew in aligning language models and adapting their output to a particular style or structure. This goal is drastically different from teaching an LLM to solve a new task. When we are trying to teach an LLM new knowledge, more data generally helps (hence the large instruction tuning datasets used by models like FLAN). However, aligning the language model to a certain style or structure of output does not require learning new information! So, maybe alignment-focused goals require less extensive fine tuning.

Less is more for alignment: Research on the topic of LLM fine tuning was catalyzed by the release of LLaMA [2] (and later LLaMA-2 [3]), which made high-quality foundation LLMs openly available. Shortly after LLaMA, authors from Meta published LIMA [4], which showed that alignment-style fine tuning can be accomplished with very little data. Namely, the goal of alignment is to adapt the LLM’s style (rather than to learn new information), which can be accomplished via a small, high-quality, and diverse fine tuning dataset. These findings suggest that most of an LLM’s knowledge comes from pretraining, while the LLM learns the correct style during alignment (see quote below).

“A model’s knowledge and capabilities are learnt almost entirely during pretraining, while alignment teaches it which subdistribution of formats should be used when interacting with users.” – from LIMA paper [4]

Imitating proprietary LLMs: Following LIMA, a massive number of high-quality, fine tuned LLMs (e.g., Alpaca, Vicuna, Koala, Orca, and more) were created by fine tuning LLaMA over small synthetic fine tuning datasets of GPT-3.5/4 outputs. In this way, we could train these models to imitate the output of more powerful LLMs. When evaluated in human trials and on simplistic benchmarks, these models seemed to match (or exceed) the performance of powerful models like ChatGPT. For this reason, practitioners began to believe that we could surpass models like GPT-4 or ChatGPT by performing a small amount of (inexpensive) fine tuning.

What is going on here? Obviously, training a model like ChatGPT cannot be done this easily. Researchers quickly found some limitations in the work done on imitation models [5]:

- Humans are easily tricked if the style of the LLM is good, and (as shown by LIMA) these models can quickly learn to mimic the style of models like ChatGPT with little data.

- The benchmarks that were used are too limited. The models perform well when evaluated by a small group of humans, but their performance falls apart on more extensive benchmarks that include traditional, perplexity-based evaluations (e.g., normal NLP benchmarks).
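To make “perplexity-based evaluation” concrete, here is a minimal sketch of how such a score can be computed from per-token log-probabilities. The function names and the toy numbers are assumptions for illustration; the papers above do not prescribe this code. Perplexity is the exponential of the mean negative log-likelihood, and a common way to score multiple-choice benchmarks is to pick the option the model finds most likely.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood), using natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

def pick_by_likelihood(options_log_probs):
    """Return the index of the answer option with the lowest perplexity."""
    scores = [perplexity(lp) for lp in options_log_probs]
    return scores.index(min(scores))

# Hypothetical log-probabilities a model assigned to two candidate answers.
option_a = [math.log(0.9), math.log(0.8)]   # model finds this answer likely
option_b = [math.log(0.2), math.log(0.1)]   # model finds this answer unlikely
best = pick_by_likelihood([option_a, option_b])
```

Because this score depends only on the probabilities the model assigns, a polished writing style does not help here in the way it can with human raters.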

We can learn certain things (e.g., style and output format) from fine tuning over a small amount of data, but we can’t learn everything! These imitation models lack the knowledge base of more powerful LLMs, which can only be learned from large amounts of data.

Putting everything together: Given all of the information we’ve covered so far, there are a few takeaways that we can deduce:

- Most of an LLM’s knowledge comes from pretraining.

- We can perform fine tuning in the form of continued pretraining to expose the LLM to more (and new) data/knowledge.

- Alignment-focused objectives can be achieved via fine tuning (SFT) on small, high-quality datasets. We don’t need tons of data to learn the style or format of output, only to learn new knowledge.
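One way to see the difference between continued pretraining and SFT is through which tokens contribute to the training loss. The sketch below is a simplified illustration, not the setup of any paper cited here: it assumes the common (but not universal) SFT convention of computing the loss only on response tokens, and the log-probabilities are made-up numbers.

```python
import math

def token_nll(log_probs, loss_mask):
    """Mean negative log-likelihood over the positions where loss_mask is 1."""
    selected = [-lp for lp, m in zip(log_probs, loss_mask) if m]
    if not selected:
        raise ValueError("loss_mask selects no tokens")
    return sum(selected) / len(selected)

def pretraining_mask(num_tokens):
    # Continued pretraining: every token is a prediction target.
    return [1] * num_tokens

def sft_mask(num_prompt_tokens, num_response_tokens):
    # SFT (assumed convention): only the response tokens are prediction targets.
    return [0] * num_prompt_tokens + [1] * num_response_tokens

# Hypothetical per-token log-probabilities: 3 prompt tokens, then 2 response tokens.
log_probs = [math.log(0.5), math.log(0.25), math.log(0.5),
             math.log(0.8), math.log(0.4)]

cpt_loss = token_nll(log_probs, pretraining_mask(5))  # averages over all 5 tokens
sft_loss = token_nll(log_probs, sft_mask(3, 2))       # averages over the 2 response tokens
```

The underlying objective is the same next-token prediction in both cases; what changes is the data and which positions are scored.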

When performing fine tuning, it’s very important to know which goal (alignment or knowledge injection) we are aiming for. Then, we should put benchmarks in place that allow us to accurately and comprehensively assess whether that goal was accomplished. Imitation models failed to do this, which led to a bunch of misleading claims/results!
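As a rough sketch of what goal-specific benchmarks could look like, the snippet below scores the same model outputs twice: once for format compliance (an alignment-style metric) and once for answer correctness (a knowledge-style metric). The `Answer: ...` format, the helper names, and the example outputs are all hypothetical.

```python
import re

def extract_answer(output):
    """Take the text after 'Answer:' if the prefix is present, else the whole output."""
    return output.split("Answer:", 1)[-1].strip()

def exact_match_accuracy(predictions, references):
    """Knowledge-style metric: fraction of predictions equal to the reference after normalization."""
    def norm(s):
        return " ".join(s.lower().split())
    hits = sum(norm(p) == norm(r) for p, r in zip(predictions, references))
    return hits / len(references)

def format_compliance_rate(outputs, pattern):
    """Alignment-style metric: fraction of outputs that fully match the required format."""
    regex = re.compile(pattern, re.DOTALL)
    return sum(bool(regex.fullmatch(o)) for o in outputs) / len(outputs)

# Hypothetical model outputs for four questions whose correct answer is "Paris".
outputs = ["Answer: Paris", "Answer: Lyon", "Paris", "Answer: Rome"]
references = ["Paris"] * 4

compliance = format_compliance_rate(outputs, r"Answer: .+")                        # 3 of 4 follow the format
accuracy = exact_match_accuracy([extract_answer(o) for o in outputs], references)  # 2 of 4 are correct
```

In this toy case the format score is higher than the accuracy score, which is the kind of gap a style-only evaluation would hide.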

Ongoing work: The story doesn’t stop here! In fact, the distinction between pretraining and fine tuning is still quite vague. At what point does the LLM start actually learning new knowledge instead of just learning style/alignment? Many recent publications are continuing to study this question:

- Finetuning vs. RAG [6]: authors find that continued pretraining is not super effective at knowledge injection, while RAG is actually highly effective at specializing an LLM to a new knowledge base.

- LIMIT [7]: authors from MosaicML/Databricks show that we can perform finetuning over a small mixture of instruction tuning and alignment-focused data, leading to a model that performs well in both NLP benchmarks and style-focused evaluations.

- TULU [8]: authors subject finetuned LLMs to broader evaluations, finding that the quality of the base model has a massive impact on performance and that no one finetuning dataset/strategy yields the best results across all benchmarks.

- TULU-2 [9]: authors show that finetuning LLMs over specific datasets leads to the model learning specific skills and domains of data. Finetuning works well if we make sure the finetuning dataset is highly relevant to the style/domain of evaluation we are using.

- AlpaGasus [10]: authors directly study how much finetuning data is necessary for an LLM to perform well on various downstream tasks.
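For readers unfamiliar with RAG, the idea is to inject knowledge at inference time by retrieving relevant passages and placing them in the prompt, rather than changing the model’s weights. The following is a toy sketch under stated assumptions: the word-overlap scorer stands in for the embedding-based retrievers that real systems typically use, and the documents and prompt template are invented for illustration.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def overlap_score(query, passage):
    """Count query words that also appear in the passage (toy relevance score)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum(min(q[w], p[w]) for w in q)

def retrieve(query, passages, k=2):
    """Return the k passages with the highest overlap score."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]

def build_prompt(query, passages, k=2):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in retrieve(query, passages, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

# Hypothetical knowledge base the pretrained model has never seen.
docs = [
    "The warranty for the X100 router lasts 24 months.",
    "Our office is closed on public holidays.",
    "The X100 router supports firmware updates over USB.",
]
prompt = build_prompt("How long is the X100 router warranty?", docs, k=1)
```

The resulting prompt would then be sent to the LLM unchanged; the new facts live in the retrieved context rather than in the model’s parameters.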

Bibliography:

[1] Wei, Jason, et al. “Finetuned language models are zero-shot learners.” arXiv preprint arXiv:2109.01652 (2021).

[2] Touvron, Hugo, et al. “LLaMA: Open and efficient foundation language models.” arXiv preprint arXiv:2302.13971 (2023).

[3] Touvron, Hugo, et al. “Llama 2: Open foundation and fine-tuned chat models.” arXiv preprint arXiv:2307.09288 (2023).

[4] Zhou, Chunting, et al. “LIMA: Less is more for alignment.” Advances in Neural Information Processing Systems 36 (2024).

[5] Gudibande, Arnav, et al. “The false promise of imitating proprietary LLMs.” arXiv preprint arXiv:2305.15717 (2023).

[6] Ovadia, Oded, et al. “Fine-tuning or retrieval? Comparing knowledge injection in LLMs.” arXiv preprint arXiv:2312.05934 (2023).

[7] Jha, Aditi, et al. “LIMIT: Less Is More for Instruction Tuning Across Evaluation Paradigms.” arXiv preprint arXiv:2311.13133 (2023).

[8] Wang, Yizhong, et al. “How far can camels go? Exploring the state of instruction tuning on open resources.” Advances in Neural Information Processing Systems 36 (2024).

[9] Ivison, Hamish, et al. “Camels in a changing climate: Enhancing LM adaptation with Tulu 2.” arXiv preprint arXiv:2311.10702 (2023).

[10] Chen, Lichang, et al. “AlpaGasus: Training a better Alpaca with fewer data.” arXiv preprint arXiv:2307.08701 (2023).
