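This document discusses the comparison between outcome supervision and process supervision for training reward models for large language models, the methods used to carry out that comparison, and the contributions of the research.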

What are hallucinations in large language models and why are they problematic in domains that require multi-step reasoning?

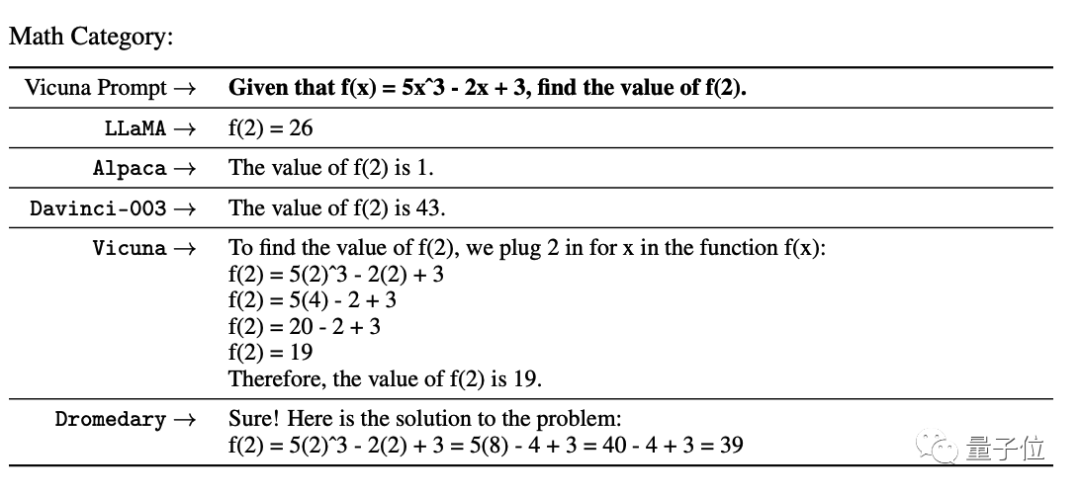

Hallucinations in large language models are the models' tendency to invent facts or generate false information in moments of uncertainty. They are especially problematic in domains that require multi-step reasoning, because a single logical error can derail a much larger solution: every later step that builds on the mistaken one inherits the error. The result is incorrect or unreliable output, which is harmful in applications that depend on accurate, reliable reasoning.
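To make the compounding effect concrete, here is a toy calculation. It assumes, purely for illustration, that each step is correct independently with the same probability `p_step`. Real reasoning chains do not satisfy this, and the function name `chain_success_probability` is hypothetical.

```python
# Toy model (assumption): every step is correct independently with the same
# probability p_step. Real chains of thought are not this simple.

def chain_success_probability(p_step: float, n_steps: int) -> float:
    """Probability that all n_steps are correct under the independence assumption."""
    if not 0.0 <= p_step <= 1.0:
        raise ValueError("p_step must be in [0, 1]")
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return p_step ** n_steps


# Show how a fixed per-step accuracy erodes as solutions get longer.
for n in (1, 5, 10, 20):
    print(f"{n:>2} steps: {chain_success_probability(0.95, n):.3f}")
```

Under this simplified model, a 95% per-step accuracy falls to roughly 60% over 10 steps and roughly 36% over 20 steps, which is why a single mistaken step matters more as solutions get longer.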

What are outcome-supervised reward models (ORMs) and process-supervised reward models (PRMs), and what are the advantages of process supervision over outcome supervision?

Outcome-supervised reward models (ORMs) are trained using only the final result of the model's chain-of-thought, while process-supervised reward models (PRMs) receive feedback for each step in the chain-of-thought. Process supervision provides more precise feedback, since it specifies the exact location of any errors that occur. It also has several advantages relevant to AI alignment: its feedback is easier for humans to interpret, and it more directly rewards models for following a human-endorsed chain-of-thought. Within the domain of logical reasoning, models trained with outcome supervision regularly use incorrect reasoning to reach the correct final answer, because a correct answer earns the full reward no matter how it was reached; process supervision has been shown to mitigate this misaligned behavior.
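The difference between the two supervision signals can be sketched in Python. Everything here is hypothetical: the `Solution` record, the helper names, and the toy arithmetic problem are illustrative only. `prm_solution_score` assumes one plausible way to turn per-step correctness probabilities into a single solution score (their product, i.e. the probability that every step is correct when steps are treated as independent); other aggregations, such as taking the minimum, are possible. The example solution reaches the right final answer through two arithmetic slips that cancel out, which is exactly the case outcome supervision cannot see.

```python
from dataclasses import dataclass
from math import prod
from typing import Optional


@dataclass
class Solution:
    """A model-generated solution (hypothetical record for illustration)."""
    steps: list[str]            # chain-of-thought, one entry per step
    step_correct: list[bool]    # per-step human labels (process supervision)
    final_answer_correct: bool  # check of the final answer only (outcome supervision)


def orm_label(sol: Solution) -> int:
    """Outcome supervision: one label for the whole solution, from the final answer alone."""
    return int(sol.final_answer_correct)


def prm_labels(sol: Solution) -> list[int]:
    """Process supervision: one label per step, so errors are localized."""
    return [int(ok) for ok in sol.step_correct]


def first_error(sol: Solution) -> Optional[int]:
    """Index of the first incorrect step, or None if every step is correct."""
    for i, ok in enumerate(sol.step_correct):
        if not ok:
            return i
    return None


def prm_solution_score(step_probs: list[float]) -> float:
    """Assumed aggregation: product of per-step correctness probabilities."""
    return prod(step_probs)


# Problem: what is 2 * 3 + 1?  Two arithmetic slips cancel, so the final answer 7 is right.
lucky = Solution(
    steps=["2 * 3 = 5", "5 + 1 = 7"],
    step_correct=[False, False],
    final_answer_correct=True,
)

print(orm_label(lucky))    # 1: outcome supervision rewards the flawed solution
print(prm_labels(lucky))   # [0, 0]: process supervision flags both bad steps
print(first_error(lucky))  # 0: the first error is at the first step
```

The outcome label treats this solution as fully correct, while the per-step labels expose the flawed reasoning and pinpoint where it first goes wrong.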
